INTERNATIONAL INVESTMENT AND PORTAL

The new Law on Artificial Intelligence governs the research, development, provision, deployment and use of AI systems, while explicitly excluding these activities where they are carried out solely for national defence and security purposes.

Managing partner Dang The Duc and senior associate Thai Gia Han of Indochine Counsel

In addition to Vietnamese entities (including agencies, organisations and individuals), foreign organisations and individuals participating in AI activities in Vietnam are also subject to the law.

Based on the current wording of articles 1 and 2, even where an AI system is developed entirely offshore by a foreign entity, its provision for use in Vietnam is still likely to fall within the scope of the AI law.

The AI law defines an “AI system” as “a machine-based system designed to perform AI capabilities with varying levels of autonomy and capable of self-adaptation after deployment; based on explicitly specified or implicitly formed objectives, the system infers from input data to generate outputs such as predictions, content, recommendations, or decisions that may affect the physical or virtual environment”.

The definition clearly draws on internationally recognised concepts of what an AI system fundamentally is, reflecting Vietnam's effort to align with international standards in the field.

The AI law also clearly identifies the key parties involved in AI activities and AI systems, with the primary objective of allocating corresponding rights and obligations throughout the AI lifecycle. Under the law, a “developer” refers to any organisation or individual that designs, develops, trains, or materially modifies an AI system, including through the selection of algorithms, model architectures, training methodologies, or training data, whether for its own use or for provision to third parties.

A “provider” is defined as any entity that supplies, distributes, places on the market, or otherwise makes available an AI system to another party on either a commercial or non-commercial basis, including via software licensing, cloud services, APIs, or embedded solutions.

A “deployer” is any organisation or individual that integrates, implements, or uses an AI system within its own operations or products and exercises control over the purpose, context, or manner in which the system is applied, while a “user” is a natural or legal person that interacts with or benefits from AI-generated outputs without controlling the system’s design or operation.

Most importantly, the AI law consistently emphasises ethics and the protection of human rights in AI activities. Accordingly, humans must always be placed at the centre of all AI activities, and AI systems are to be regarded as tools that assist humans rather than replace them.

Classification and compliance

Compared with earlier drafts, the AI law adopts a functional and technology-neutral definition of AI. This aims to future-proof the law against rapid technological change and ensure that it captures new AI techniques as they emerge.

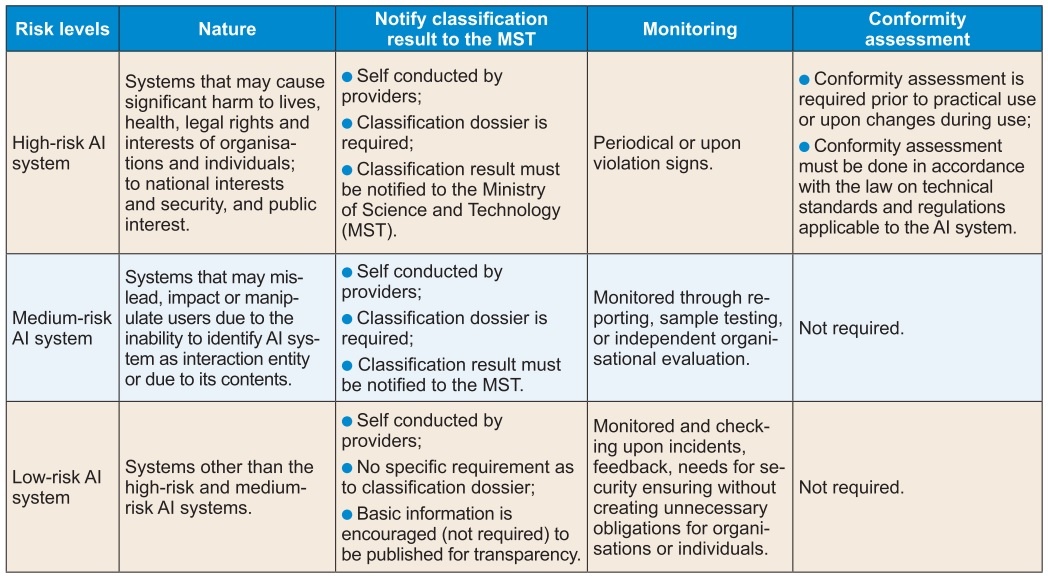

This approach also supports the risk-based classification of AI systems adopted under the AI law, which in turn facilitates the identification and allocation of the corresponding compliance obligations (see Table).

The risk level of an AI system is assessed by reference to several key criteria, including the extent of its impact on human rights, safety, and security. Other factors taken into consideration are the sector in which the system is deployed (particularly where that sector constitutes an essential service or directly relates to the public interest), the scope of users, and the scale of the system's potential impact.

Meanwhile, transparency obligations vary according to each party's role in relation to an AI system. This suggests that these obligations apply to all AI systems, regardless of their risk level.

Data and human rights

Data protection, ethical standards, and human rights safeguards are priorities that are clearly reflected in the AI law, which dedicates Chapter V in its entirety to ethics and responsibilities in AI activities. By elevating these issues into a standalone chapter, the legislators signal that ethical compliance and human-centric safeguards are fundamental to AI governance, rather than merely supplementary to technical or security requirements.

The law sets out high-level principles and responsibilities applicable throughout the AI system lifecycle. These include the obligations to respect and protect human rights, to prevent discriminatory or biased outcomes, and to ensure an appropriate level of transparency and human oversight, particularly where AI systems may materially affect individuals or operate in high-risk contexts.

These principles are closely linked to data protection requirements, reinforcing expectations around lawful data use, data quality, and accountability in AI-related activities.

A national AI ethics framework is to be issued, then periodically reviewed and updated in response to significant technical, legal and practical management developments. The framework will serve as the basis for developing all professional standards and guidelines in the field. However, based on the current wording of the AI law, its application appears to be encouraged rather than mandatory.

From a practical perspective, it can be interpreted that AI compliance will be assessed not only on the basis of technical performance, but also on the societal and individual impacts of AI deployment. Businesses engaging in AI activities should therefore integrate ethical and human rights considerations into their internal governance and compliance frameworks from an early stage.

The AI law marks a significant step in Vietnam’s effort to establish a comprehensive and risk-based regulatory framework for AI. While a number of implementing details remain subject to further guidance, clear signals have been provided that AI compliance will extend beyond technical requirements to encompass accountability, data governance, and responsible deployment throughout the AI lifecycle.

AI systems launched before the AI law's effective date must still comply with its obligations. A grace period of 18 months applies to AI systems in the medical, educational and financial fields, and a grace period of 12 months applies to AI systems in other fields.

Businesses engaging in AI activities should therefore start preparing early to ensure timely alignment and mitigate regulatory risk once the law takes effect.